SALT (Speech Application Language Tags) is an extension of HTML and other markup languages (cHTML, XHTML, WML) that adds a speech interface to Web pages while keeping all the advantages of the Web application model. The tags are designed for both voice-only browsers (for example, a browser accessed over the telephone) and multimodal browsers. Multimodal access lets users interact with an application in several ways: they can enter data using speech, a keyboard, keypad, mouse, and/or stylus, and receive output as synthesized speech, audio, plain text, motion video, and/or graphics. Each of these modes can be used independently or concurrently. The full SALT specification is currently being developed by the SALT Forum, an open industry initiative committed to a royalty-free, platform-independent standard that will enable multimodal and telephony-enabled access to information, applications, and Web services from PCs, telephones, tablet PCs, and wireless personal digital assistants (PDAs). The following sections give a brief overview of SALT and how to use it. For full details, visit the SALT Forum site and download the current version of the SALT specification.


Microsoft Speech Server with VoiceXML

VoiceXML is a markup language designed to simplify the creation of interactive voice response (IVR) services. It lets developers build voice dialogs for telephone callers that play prompts from pre-recorded audio or text-to-speech, respond to spoken commands (via speech recognition) and touch-tone input, and record caller audio. The VoiceXML version 1.0 specification has also been submitted to the W3C as of March of this year, so it looks like a standard you can hang your hat on for at least a while. The burning question with any new standard, of course, is how many vendors will actually adopt it and release products based on it. With AT&T, IBM, Motorola, and Lucent as founding members of the VoiceXML Forum, and some 150 other companies signed up as official members so far, VoiceXML is off to a good start. For more information about the Forum, see http://www.voicexml.org. The official VoiceXML 1.0 specification can also be downloaded free from that site.
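To make the dialog features above concrete, here is a small, hand-written VoiceXML 1.0-style document, parsed with Python's standard `xml.etree.ElementTree` module. It is a sketch, not an excerpt from the specification: the prompt wording, the `welcome.wav` file, the `department` and `message` names, and the simplified inline grammar syntax are assumptions for illustration (real platforms differ in which grammar formats they accept). The helpers `dialog_items` and `prompt_texts` are likewise hypothetical; they simply list what the document asks the interpreter to do.

```python
import xml.etree.ElementTree as ET

# Illustrative VoiceXML 1.0-style document (hypothetical content): a welcome
# prompt that plays welcome.wav or falls back to text-to-speech, a menu field
# that accepts speech or touch tones, and a recording of caller audio.
# The inline <grammar>/<dtmf> syntax is simplified for illustration.
VXML_DOC = """<?xml version="1.0"?>
<vxml version="1.0">
  <form id="main">
    <block>
      <prompt><audio src="welcome.wav">Welcome to the help line.</audio></prompt>
    </block>
    <field name="department">
      <prompt>Say sales or support, or press 1 or 2.</prompt>
      <grammar>sales | support</grammar>
      <dtmf>1 | 2</dtmf>
    </field>
    <record name="message" beep="true" maxtime="30s">
      <prompt>Please leave a message after the beep.</prompt>
    </record>
  </form>
</vxml>"""


def dialog_items(vxml_text):
    """Return (tag, name) pairs for the items inside each <form>."""
    root = ET.fromstring(vxml_text)
    return [(item.tag, item.get("name")) for form in root.iter("form") for item in form]


def prompt_texts(vxml_text):
    """Return the text of every <prompt>, including fallback text nested in <audio>."""
    root = ET.fromstring(vxml_text)
    # itertext() walks nested elements; split/join normalizes whitespace.
    return [" ".join("".join(p.itertext()).split()) for p in root.iter("prompt")]


if __name__ == "__main__":
    print(dialog_items(VXML_DOC))
    for text in prompt_texts(VXML_DOC):
        print(text)
```

Running the script lists the form items (`block`, `field`, `record`) and the three prompts: the pre-recorded welcome with its text-to-speech fallback, the speech/touch-tone menu, and the recording instruction.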

Benefits of VoiceXML

So why should you create IVR applications based on VoiceXML when existing IVR products work just fine? One significant advantage is that VoiceXML can directly use information stored on, or accessed through, a corporate web server. Since IVR systems typically need access to one or more corporate databases, any database connectivity a company has already implemented through its web server can be reused directly in a VoiceXML script. This saves development time and money and can greatly reduce maintenance costs. Another clear benefit is that existing, mature web development tools can be used to build VoiceXML-based IVR applications. Using those tools and development methodologies also frees IVR application developers from low-level details of the IVR platform and database access. By their very nature, VoiceXML applications are highly portable across web servers and IVR platforms that properly support the standard. This means you are free to switch to another VoiceXML-compliant IVR platform vendor without losing your development work.
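A rough sketch of the database-reuse argument, assuming a Python web stack: an existing data-access function (`lookup_balance`, hypothetical, backed here by an in-memory `sqlite3` table standing in for a corporate database) is called unchanged, and only the rendering step emits VoiceXML instead of HTML. The `accounts` schema, the account number, and the WSGI app are illustrative assumptions. The `application/voicexml+xml` media type is the one later registered for VoiceXML; older platforms may expect `text/xml` instead.

```python
import sqlite3
from urllib.parse import parse_qs
from xml.sax.saxutils import escape


def make_demo_db():
    """Hypothetical stand-in for the corporate database the web server already uses."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance_cents INTEGER)")
    conn.execute("INSERT INTO accounts VALUES ('1001', 12550)")
    conn.commit()
    return conn


def lookup_balance(conn, account_id):
    """Existing data-access code, shared unchanged by HTML and voice front ends."""
    row = conn.execute(
        "SELECT balance_cents FROM accounts WHERE id = ?", (account_id,)
    ).fetchone()
    return None if row is None else row[0]


def balance_vxml(conn, account_id):
    """Render the same lookup result as a VoiceXML document instead of HTML."""
    cents = lookup_balance(conn, account_id)
    if cents is None:
        message = "Sorry, that account number was not found."
    else:
        message = f"Your balance is {cents // 100} dollars and {cents % 100} cents."
    return (
        '<?xml version="1.0"?>\n'
        '<vxml version="1.0"><form><block><prompt>'
        f"{escape(message)}"
        "</prompt></block></form></vxml>"
    )


def make_app(conn):
    """WSGI app: any WSGI-capable web server can serve VoiceXML like any other page."""
    def app(environ, start_response):
        query = parse_qs(environ.get("QUERY_STRING", ""))
        account_id = query.get("account", [""])[0]
        body = balance_vxml(conn, account_id).encode("utf-8")
        start_response("200 OK", [
            ("Content-Type", "application/voicexml+xml"),
            ("Content-Length", str(len(body))),
        ])
        return [body]
    return app


if __name__ == "__main__":
    print(balance_vxml(make_demo_db(), "1001"))
```

To serve it, pass `make_app(make_demo_db())` to `wsgiref.simple_server.make_server` and point a VoiceXML platform at the resulting URL with `?account=1001`. The design choice is the point: the query code is shared by the HTML and voice front ends, so only the presentation layer changes.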


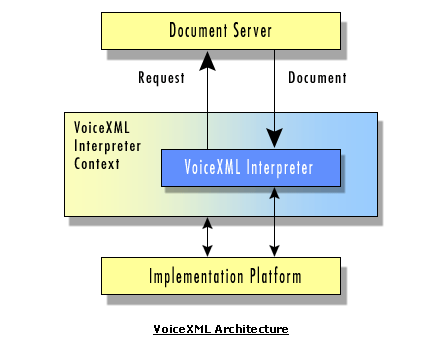

VoiceXML Architecture

Here's a little about how VoiceXML works under the covers. As shown in figure 1, a document server (usually a web server) processes requests from a client application, the VoiceXML interpreter, through the VoiceXML interpreter context. The server replies with VoiceXML documents containing the VoiceXML commands that the VoiceXML interpreter processes. The VoiceXML interpreter context may monitor caller input in parallel with the VoiceXML interpreter. For example, one VoiceXML interpreter context may always listen for a special touch-tone command that takes the caller to a special menu, while another may listen for a command that changes the playback volume during the call.

The implementation platform contains the telephone hardware and related computer telephony (CT) resources, and is controlled by both the VoiceXML interpreter context and the VoiceXML interpreter. For instance, in an IVR application the VoiceXML interpreter context may detect an incoming call, read the first VoiceXML document, and answer the call, after which the VoiceXML interpreter executes the first touch-tone menu. The implementation platform generates events in response to caller actions (for example, touch-tone or spoken commands) and system events (for example, expiring timers). Some of these events are acted upon by the VoiceXML interpreter itself, as specified by the VoiceXML document, while others are acted upon by the VoiceXML interpreter context.
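The division of labor between the interpreter context and the interpreter can be modeled as a small event dispatcher. This is a toy sketch under stated assumptions, not how any real VoiceXML platform is implemented: the class names, the event kinds (`call`, `dtmf`, `speech`, `noinput`), and the choice of `*` for the special menu and `#` for volume are all hypothetical, and a single context holds both of the global listeners described above. The context sees every platform event first; anything it does not claim is passed to the interpreter, which reacts only if the current document declares a handler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str       # hypothetical kinds: "call", "dtmf", "speech", "noinput"
    value: str = ""


class Interpreter:
    """Runs the current document; reacts only to events the document declares."""

    def __init__(self, document_handlers):
        # Maps event kind -> callable(value) -> description of the action taken.
        self.document_handlers = document_handlers

    def dispatch(self, event):
        handler = self.document_handlers.get(event.kind)
        return handler(event.value) if handler else f"unhandled {event.kind}"


class InterpreterContext:
    """Sees every platform event first and keeps global listeners active."""

    def __init__(self, interpreter, menu_key="*", volume_key="#"):
        self.interpreter = interpreter
        self.menu_key = menu_key
        self.volume_key = volume_key
        self.volume = 5  # hypothetical playback volume on a 0-10 scale

    def on_platform_event(self, event):
        if event.kind == "call":
            # The context answers the call and would fetch the first document here.
            return "answered call, fetched first document"
        if event.kind == "dtmf" and event.value == self.menu_key:
            return "jumped to special menu"
        if event.kind == "dtmf" and event.value == self.volume_key:
            self.volume = min(self.volume + 1, 10)
            return f"volume set to {self.volume}"
        # Everything else is left to the interpreter and the current document.
        return self.interpreter.dispatch(event)


if __name__ == "__main__":
    # Handlers that a hypothetical touch-tone menu document would declare.
    menu = Interpreter({
        "dtmf": lambda digit: f"menu choice {digit}",
        "noinput": lambda _value: "reprompt",
    })
    context = InterpreterContext(menu)
    events = [Event("call"), Event("dtmf", "1"), Event("dtmf", "#"),
              Event("noinput"), Event("dtmf", "*"), Event("speech", "help")]
    for event in events:
        print(event, "->", context.on_platform_event(event))
```

With these events, the context answers the call, the document handles the menu choice `1` and the `noinput` timeout, the context intercepts `#` and `*`, and the spoken `help` goes unhandled because this document declares no speech handler.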